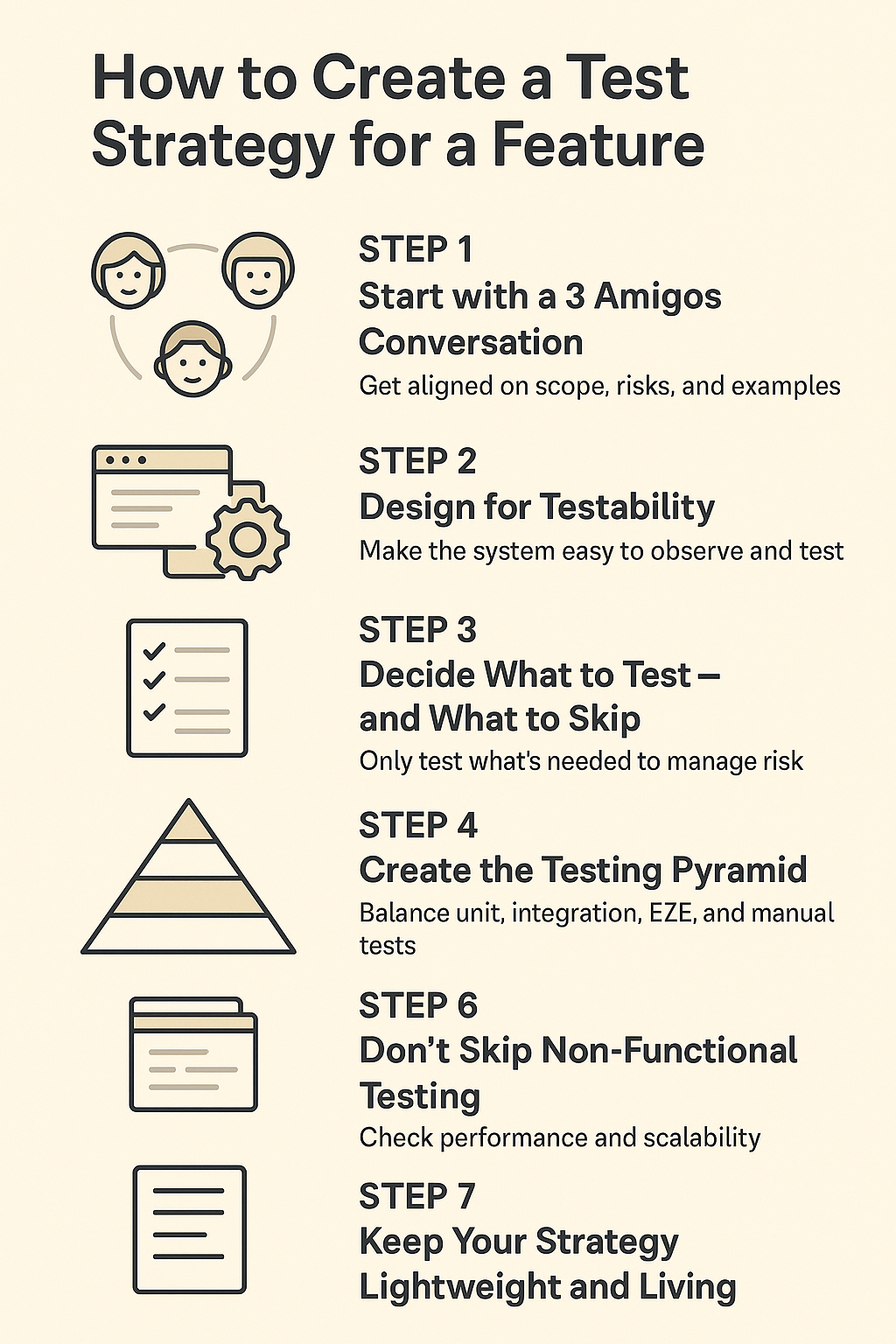

Crafting a Feature Test Strategy That Actually Works

By Gary Worthington, More Than Monkeys

Testing isn’t, or shouldn’t be, just about ticking boxes. It’s about building confidence and delivering quality software efficiently. Crafting the right test strategy can feel tricky, but here’s a detailed, practical guide to ensure nothing vital gets overlooked without wasting everyone’s time.

Step 1: Align Early with a 3 Amigos Conversation

Before writing code or tests, hold a brief alignment meeting with Product, Engineering, and Testers. Clarify:

- What’s the real goal of the feature?

- What are the essential behaviours and scenarios? Use real-world examples.

- Risks, edge cases, and assumptions that need validating.

- What defines success (e.g., the “definition of done”)?

Everyone should leave with a shared understanding of the feature’s intent and a clear outline of its risks.

Step 2: Design for Testability

Great tests start with good design. You should aim for:

- Clear separation of concerns.

- Easy dependency injection.

- Predictable inputs and outputs.

- Minimal hidden side effects.

If your feature feels hard to test, reconsider the design. Good architecture simplifies testing and improves overall software quality.
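As a rough illustration of dependency injection, here is a minimal Python sketch loosely based on a file upload feature. The names `UploadService`, `VirusScanner`, and `FakeScanner` are hypothetical and exist only for this example. The point is that the scanner is handed to the service rather than created inside it, so a test can swap in a predictable fake.

```python
from dataclasses import dataclass
from typing import Protocol


class VirusScanner(Protocol):
    """Anything that can say whether file content is safe (hypothetical interface)."""

    def is_clean(self, content: bytes) -> bool: ...


@dataclass
class UploadService:
    # The scanner is passed in rather than constructed here,
    # so tests can substitute a fake without touching the network.
    scanner: VirusScanner

    def upload(self, filename: str, content: bytes) -> str:
        if not self.scanner.is_clean(content):
            return "rejected"
        return "stored"


class FakeScanner:
    """Test double that always returns a fixed verdict."""

    def __init__(self, verdict: bool) -> None:
        self.verdict = verdict

    def is_clean(self, content: bytes) -> bool:
        return self.verdict


service = UploadService(scanner=FakeScanner(verdict=False))
print(service.upload("report.pdf", b"%PDF-1.7"))  # prints "rejected"
```

With this shape, inputs and outputs are predictable and the only side effect is whatever the injected scanner does, which is exactly what makes the class easy to test.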

Step 3: Decide What to Test, and What to Skip

Not every feature or scenario deserves the same level of testing. Here’s a practical way to decide:

- Logic-heavy code: Write Unit Tests to quickly verify complex logic.

- External service integrations: Implement Integration Tests to confirm interactions with external services.

- Critical user flows: Conduct End-to-End (E2E) Tests to validate essential workflows from start to finish.

- Complex or visual elements: Rely on Manual Exploratory Testing to uncover nuanced UI issues and usability problems.

- Performance-critical features: Run Performance Tests to ensure your system handles realistic load conditions.

- Unstable or experimental features: Use robust Observability and quick feedback mechanisms to swiftly detect and respond to issues.

Always prioritise testing based on potential risks and impacts rather than merely ticking boxes.

Step 4: Create Your Testing Pyramid

Use the Test Pyramid as a framework to visualise your testing distribution:

▲

End to End

Manual/Exploratory

Integration

Unit

Ensure you have more fast, stable tests (like unit tests) and fewer brittle tests (like E2E tests). Avoid the “ice cream cone” scenario, where you have too many slow UI tests and too few foundational tests.

Step 5: Layer Your Tests Smartly

Unit Tests

Quick, deterministic tests for specific logic. Examples:

- Input validation (formats, sizes)

- Metadata handling

- Error paths

- Business logic (calculations, conditional logic)

- Utility functions (string manipulation, date/time conversions)

- State management (state transitions, initial states)

- Edge cases (boundary conditions, negative tests)
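To make the input validation and edge case items concrete, here is a small sketch of pytest-style unit tests. The function `validate_upload`, the 10 MiB limit, and the extension allow-list are all assumptions made up for this example, not rules from any real system.

```python
MAX_SIZE_BYTES = 10 * 1024 * 1024  # assumed limit for this example
ALLOWED_EXTENSIONS = {".pdf", ".png", ".jpg"}  # assumed allow-list


def validate_upload(filename: str, size_bytes: int) -> list[str]:
    """Return a list of validation errors; an empty list means the file is valid."""
    errors = []
    dot = filename.rfind(".")
    extension = filename[dot:].lower() if dot != -1 else ""
    if extension not in ALLOWED_EXTENSIONS:
        errors.append("unsupported file type")
    if size_bytes <= 0:
        errors.append("file is empty")
    elif size_bytes > MAX_SIZE_BYTES:
        errors.append("file too large")
    return errors


# Boundary conditions: exactly at the limit and one byte over it.
def test_accepts_file_exactly_at_size_limit():
    assert validate_upload("scan.pdf", MAX_SIZE_BYTES) == []


def test_rejects_file_one_byte_over_limit():
    assert validate_upload("scan.pdf", MAX_SIZE_BYTES + 1) == ["file too large"]


# Negative tests: bad input should produce clear errors.
def test_rejects_file_without_extension():
    assert validate_upload("README", 1024) == ["unsupported file type"]


def test_extension_check_is_case_insensitive():
    assert validate_upload("PHOTO.JPG", 1024) == []


def test_reports_every_problem_at_once():
    assert validate_upload("notes.exe", 0) == ["unsupported file type", "file is empty"]
```

Each test is fast and deterministic because the function takes plain values and returns plain values, with no I/O involved.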

Integration Tests

Verify interactions with external services and databases. Examples:

- Successful API calls and expected responses

- Proper error handling

- Correct database transactions
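One way to check the database transaction item is to run the real SQL against a throwaway database. The sketch below uses Python's built-in `sqlite3` module with an in-memory database. The `files` and `tags` tables and the `save_upload` function are hypothetical, and a real project would more likely point the test at the same database engine it runs in production.

```python
import sqlite3


def save_upload(conn: sqlite3.Connection, filename: str, tags: list[str]) -> None:
    """Insert a file and its tags atomically: both succeed or neither does."""
    # Used as a context manager, the connection commits on success
    # and rolls back if an exception escapes the block.
    with conn:
        cursor = conn.execute("INSERT INTO files (name) VALUES (?)", (filename,))
        for tag in tags:
            conn.execute(
                "INSERT INTO tags (file_id, tag) VALUES (?, ?)",
                (cursor.lastrowid, tag),
            )


def make_database() -> sqlite3.Connection:
    """Create a fresh in-memory database with the example schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE files (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
    conn.execute(
        "CREATE TABLE tags (file_id INTEGER NOT NULL, tag TEXT NOT NULL CHECK (tag <> ''))"
    )
    return conn


def test_successful_upload_stores_file_and_tags():
    conn = make_database()
    save_upload(conn, "report.pdf", ["finance", "2024"])
    assert conn.execute("SELECT COUNT(*) FROM files").fetchone()[0] == 1
    assert conn.execute("SELECT COUNT(*) FROM tags").fetchone()[0] == 2


def test_failed_tag_insert_rolls_back_the_file_row():
    conn = make_database()
    try:
        save_upload(conn, "report.pdf", ["finance", ""])  # empty tag violates CHECK
    except sqlite3.IntegrityError:
        pass
    else:
        raise AssertionError("expected an IntegrityError")
    # Nothing should be left behind, including the file row inserted first.
    assert conn.execute("SELECT COUNT(*) FROM files").fetchone()[0] == 0
    assert conn.execute("SELECT COUNT(*) FROM tags").fetchone()[0] == 0
```

The second test covers the error handling item too: it confirms that a failure partway through leaves no half-written data.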

E2E Tests

Validate key user journeys end-to-end. Examples:

- Main success paths (e.g., user sign-up, payment processing)

- Critical failure paths

Manual Exploratory

Capture nuanced UX and unforeseen issues. Examples:

- Visual inconsistencies

- Browser/device compatibility issues

- Accessibility and usability issues

Write just enough tests, at the right time, to catch meaningful issues early.

Step 6: Don’t Neglect Performance and Reliability

Always validate:

- Load handling under realistic user volumes.

- Response times and stability under stress.

Use tools like Locust, k6, Lighthouse, or your cloud provider’s monitoring tools to ensure your feature can perform reliably under real-world conditions.
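Before reaching for a dedicated load-testing tool, a quick concurrency check can be sketched with the Python standard library alone. This is only an illustration of the idea and not a substitute for Locust or k6. The function `handle_upload` is a hypothetical stand-in for the operation under load, and the request count and concurrency level are arbitrary.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles


def handle_upload(size_bytes: int) -> None:
    """Hypothetical stand-in for the real operation; it just sleeps briefly."""
    time.sleep(0.001)


def measure_latencies(operation, requests: int, concurrency: int) -> list[float]:
    """Call `operation` `requests` times across `concurrency` threads and time each call."""

    def timed_call(_):
        start = time.perf_counter()
        operation(1024)
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(timed_call, range(requests)))


latencies = measure_latencies(handle_upload, requests=200, concurrency=20)
# quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
p95 = quantiles(latencies, n=100)[94]
print(f"p95 latency: {p95 * 1000:.1f} ms")
```

Looking at a high percentile rather than the average helps surface the slow tail that real users notice. The actual threshold to assert against depends on your own service targets.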

Step 7: Keep Your Strategy Lean and Flexible

Avoid massive, rarely-read documents. Instead, create a concise strategy outline directly in your ticket or pull request:

- Clearly define the test scope and risks.

- Identify what’s in and out of testing scope.

- Regularly update it as your understanding evolves.

Example format:

## Test Strategy: File Upload Feature

**Scope**: File uploads, virus scans, tagging

**Test Focus**:

- Unit: Validation and metadata logic

- Integration: API calls (virus scanning, file storage)

- E2E: Primary success and failure paths

- Manual: UX and visual checks

- Performance: Concurrent upload scenarios

**Out of Scope**:

- Internal mechanics of third-party scan engine

- UI customisations post-upload

**Risks**:

- API timeouts

- Handling large file uploads

Treat your test strategy as a living document. It should be lightweight, useful, and adaptive.

Testing strategies shouldn’t be complicated rituals. They’re tools to help your team ship confidently and efficiently. Follow this practical approach, and you’ll spend less time on unnecessary tests, and more on building features your users love.

Gary Worthington is a software engineer, delivery consultant, and agile coach who helps teams move fast, learn faster, and scale when it matters. He writes about modern engineering, product thinking, and helping teams ship things that matter.

Through his consultancy, More Than Monkeys, Gary helps startups and scaleups improve how they build software — from tech strategy and agile delivery to product validation and team development.

Visit morethanmonkeys.co.uk to learn how we can help you build better, faster.

Follow Gary on LinkedIn for practical insights into engineering leadership, agile delivery, and team performance.