Probabilistic Forecasting: How to Predict Delivery Dates Without Lying to Yourself or Your Stakeholders

by Gary Worthington, More Than Monkeys

Most agile teams are still trying to answer the question “When will it be done?” using story points, gut feel, and optimism.

That’s not forecasting, that’s guesswork with a Gantt chart.

What if we stopped pretending we could predict the future by arguing over whether a story is a 3 or a 5, and instead used the data we have sitting around in <insert Jira type system here>?

That’s where probabilistic forecasting comes in. And once you get the hang of it, you’ll wonder why we ever thought summing up story points would be enough.

This article walks through what probabilistic forecasting actually is, how Monte Carlo simulations work, and how to set yourself up for meaningful, grown-up conversations about delivery dates, the kind that don’t fall apart the moment reality arrives.

First, What’s Wrong With Traditional Forecasting?

Here’s the problem: most teams still operate as if work happens linearly and predictably. They build roadmaps by slicing up epics into “days of effort,” sticking them into a spreadsheet, and declaring some version of “We’ll be done by Q3.”

But software delivery doesn’t work like that.

- Requirements change

- Teams lose people

- Unexpected complexity shows up

- Some stories are trivial, others are minefields

- Priorities shift

Linear plans are fragile because they’re built on averages and assumptions, not variation. And variation is everywhere in software.

Probabilistic forecasting flips the script. It embraces this variation. It says, “We know there’s uncertainty; let’s model it, not pretend it doesn’t exist.”

What Is Probabilistic Forecasting?

It’s a way of predicting future outcomes based on past performance, while accounting for uncertainty and variability in your system.

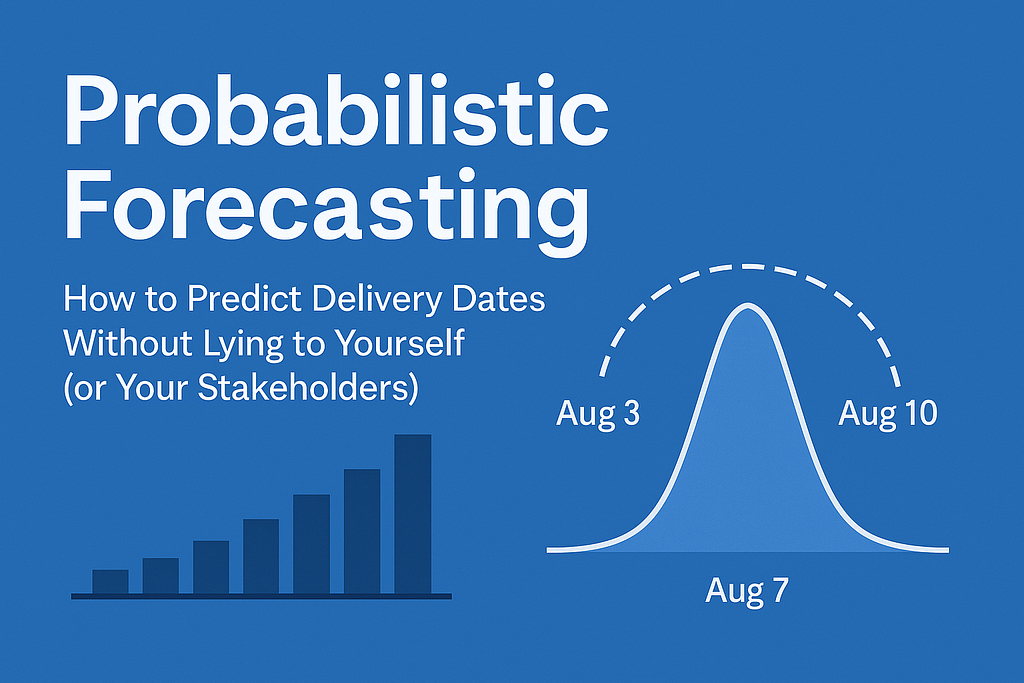

Instead of giving a single date like “We’ll be done on August 3rd,” you provide a range:

“There’s an 85% chance we’ll be done by August 3rd, and a 95% chance we’ll be done by August 7th.”

This gives stakeholders real insight into risk, not just false certainty.

And how do we generate those ranges?

Enter: Monte Carlo simulations.

Monte Carlo Simulations (Without the Maths Degree)

A Monte Carlo simulation is a way to answer “how long will this take?” by running thousands of what-if scenarios using your actual delivery data.

Let’s say you want to know how long it’ll take to finish 50 remaining stories. Here’s how the simulation might work:

- Take your historical throughput data, for example how many stories your team completes per week

- Randomly sample from that data to simulate a week of delivery

- Subtract that number of stories from the backlog

- Repeat until the backlog is empty

- Do this 10,000 times

- Plot the results and calculate percentiles

The result? A distribution of possible outcomes, from optimistic to pessimistic. You can now say things like:

- 50% chance of finishing by 5 Aug

- 85% chance of finishing by 8 Aug

- 95% chance of finishing by 10 Aug

Instead of gambling your credibility on one date, you can have a mature discussion about confidence and trade-offs.
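The steps listed above can be sketched in a few lines of Python. This is a minimal illustration, not a production tool: the function names, the `history` numbers, and the fixed `seed` are all assumptions made up for the example, and it samples whole weeks with equal probability from whatever history you give it.

```python
import math
import random


def simulate_weeks_to_finish(throughput_history, backlog_size, rng):
    """One what-if scenario: how many weeks until the backlog is empty?"""
    remaining = backlog_size
    weeks = 0
    while remaining > 0:
        # Replay a randomly chosen week from the team's real history
        remaining -= rng.choice(throughput_history)
        weeks += 1
    return weeks


def forecast_weeks(throughput_history, backlog_size, runs=10_000, seed=None):
    """Run many scenarios and return the week counts, sorted ascending."""
    if max(throughput_history) <= 0:
        raise ValueError("history needs at least one week with finished work")
    rng = random.Random(seed)
    return sorted(
        simulate_weeks_to_finish(throughput_history, backlog_size, rng)
        for _ in range(runs)
    )


def percentile(sorted_results, pct):
    """Smallest week count that at least pct% of runs finished within."""
    index = max(0, math.ceil(pct / 100 * len(sorted_results)) - 1)
    return sorted_results[index]


# Illustrative numbers only: stories finished in each of the last 8 weeks
history = [4, 6, 3, 7, 5, 2, 6, 5]
results = forecast_weeks(history, backlog_size=50, seed=42)
for pct in (50, 85, 95):
    print(f"{pct}% of runs finished within {percentile(results, pct)} weeks")
```

Turning those week counts into calendar dates is just a matter of adding them to your start date. The percentiles, not the average, are what you take into the stakeholder conversation.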

What You Need to Get Started

To run a meaningful forecast, you need:

- Consistent units of work

These could be user stories, tickets, tasks, whatever. But you can’t mix apples and donkeys. Keep your definition of “done” consistent.

- Historical throughput data

You don’t need much, 20–30 data points is enough to start. Just record how many units of work are finished per week (or per day).

- A target

  - How many work items are left (e.g. “we have 30 stories to go”), or

  - A time range (e.g. “what’s the likely output over the next 6 weeks?”)

From there, you can run simulations in a spreadsheet, use tools like Actionable Agile, or plug your data into something like Monte Carlo for Jira.
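If your target is a time range rather than a backlog size, the same sampling idea works the other way round: simulate a fixed number of weeks and count what comes out. Here is a rough sketch, with hypothetical function names and made-up throughput numbers. Note that confidence reads from the low end here, because “85% confident” means 85% of the simulated futures delivered at least that many items.

```python
import math
import random


def forecast_items_in_weeks(throughput_history, weeks, runs=10_000, seed=None):
    """Simulate a fixed time window many times; return item totals, sorted ascending."""
    rng = random.Random(seed)
    return sorted(
        sum(rng.choice(throughput_history) for _ in range(weeks))
        for _ in range(runs)
    )


def items_at_confidence(sorted_totals, confidence_pct):
    """Item count that at least confidence_pct% of runs met or exceeded."""
    index = math.floor((100 - confidence_pct) / 100 * len(sorted_totals))
    index = min(index, len(sorted_totals) - 1)
    return sorted_totals[index]


# Illustrative numbers only: stories finished in each of the last 8 weeks
history = [4, 6, 3, 7, 5, 2, 6, 5]
totals = forecast_items_in_weeks(history, weeks=6, seed=42)
for confidence in (50, 85, 95):
    count = items_at_confidence(totals, confidence)
    print(f"{confidence}% chance of finishing at least {count} stories in 6 weeks")
```

The higher the confidence you ask for, the smaller the number you can promise. That trade-off is the whole conversation.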

What About Story Points?

Here’s the fun part: you don’t need them to forecast.

Probabilistic forecasting works on the count of items, not their size. So long as your stories are right-sized (i.e. roughly the same effort), you can forecast based on throughput alone.

But that doesn’t mean you should ditch estimation altogether.

You should still estimate tickets, not to generate a timeline but to spark the right conversations during refinement. If two engineers think a story is a 2 and another thinks it’s a 13, that’s a gift: it tells you there’s misalignment, assumptions, or complexity you haven’t uncovered yet.

Estimates are a thinking tool, not a forecasting input. Their real value is in helping the team understand the scope, ask better questions, and most importantly, spot when something needs to be sliced smaller.

Estimation invites scrutiny. It helps us ensure we’re dealing with consistently sized work, and that matters deeply, because consistent story size is what makes probabilistic forecasting reliable.

So: estimate to size work, not to schedule delivery.

Forecasting happens downstream, and it doesn’t care if your story was a 3 or a 5. It only cares whether it flowed.

Right-Sizing Work Makes Forecasting Easier

Let’s not skip over this: the quality of your forecast depends heavily on how well you slice your work.

If half your stories are three-day jobs and the other half are two-week monsters, your throughput data will be all over the place.

You’ll get better signal from your system if you make stories smaller and more uniform in complexity.

That doesn’t mean obsessing over slicing to the point of absurdity. But it does mean breaking down vague epics into concrete, achievable chunks. The kind of work that can move through the board in a few days.

Smaller, well-shaped stories = more consistent flow = better forecasts.
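To see why this matters, compare two imaginary teams that both average five stories a week. One delivers steadily; the other swings between nothing and a dozen. The sketch below is an assumption-laden toy (both histories are invented, and `weeks_percentile` is a hypothetical helper), but it shows how to measure the gap between the 50% and 95% forecasts for each.

```python
import math
import random


def weeks_percentile(throughput_history, backlog_size, pct, runs=5_000, seed=0):
    """Week count that at least pct% of simulated runs finished within."""
    rng = random.Random(seed)
    outcomes = []
    for _ in range(runs):
        remaining, weeks = backlog_size, 0
        while remaining > 0:
            remaining -= rng.choice(throughput_history)  # replay a random past week
            weeks += 1
        outcomes.append(weeks)
    outcomes.sort()
    return outcomes[max(0, math.ceil(pct / 100 * runs) - 1)]


# Invented histories with the same average (5 per week) but very different variation
steady = [4, 5, 5, 6, 5, 4, 6, 5]
erratic = [0, 10, 1, 9, 0, 12, 2, 6]

steady_spread = weeks_percentile(steady, 50, 95) - weeks_percentile(steady, 50, 50)
erratic_spread = weeks_percentile(erratic, 50, 95) - weeks_percentile(erratic, 50, 50)

print(f"Steady team: {steady_spread} week(s) between the 50% and 95% forecast")
print(f"Erratic team: {erratic_spread} week(s) between the 50% and 95% forecast")
```

Expect the erratic team’s gap to come out noticeably wider. Same average, far less useful forecast, which is exactly what inconsistent story sizes do to your data.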

How to Talk About This With Stakeholders

Here’s the beauty of probabilistic forecasting: it helps you shift the conversation.

Instead of saying:

“We’ll be done by the end of the sprint.”

You can say:

“There’s a 90% chance we’ll complete these 10 stories this week based on our current flow.”

Instead of:

“The MVP will launch by the end of August.”

You say:

“We have 50 stories remaining. Based on our past 8 weeks, we’re delivering 12–16 stories per week. That gives us a high-confidence forecast of mid-August, with some margin into early September.”

That’s not hedging, or guessing. That’s honesty, backed by data.

And you’ll quickly notice that when stakeholders get confidence in your forecasting, they stop pushing for false precision.
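The numbers in the MVP example above can be sanity-checked with two divisions. Treat this as a bounding check only, assuming every remaining week lands somewhere inside the observed 12 to 16 range. It doesn’t replace the simulation, which is what tells you how likely each outcome is.

```python
import math

remaining_stories = 50
slowest_week, fastest_week = 12, 16  # throughput range quoted in the example

# Round up: a partly used week still pushes the date out
best_case_weeks = math.ceil(remaining_stories / fastest_week)   # 50 / 16 = 3.125 -> 4
worst_case_weeks = math.ceil(remaining_stories / slowest_week)  # 50 / 12 = 4.17 -> 5

print(f"Between {best_case_weeks} and {worst_case_weeks} weeks of work remaining")
```

If the range you’re quoting to stakeholders sits well outside these bounds, either the data or the story has drifted.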

What Probabilistic Forecasting Is Not

Let’s clear up a few things.

It’s not:

- A silver bullet

- An excuse for sloppy delivery

- A way to avoid conversations about scope

You still need strong agile practices: solid refinement, cross-functional alignment, good flow discipline.

Probabilistic forecasting is just a lens to understand how work actually gets done, so you can make better calls.

TL;DR: Probabilistic Forecasting in Plain Terms

- Forecasting delivery doesn’t have to be guesswork

- Monte Carlo simulations use your real delivery data to predict outcomes

- You can run forecasts without story points

- Smaller, more consistent stories = better predictions

- Forecasts are about confidence, not certainty

Gary Worthington is a software engineer, delivery consultant, and agile coach who helps teams move fast, learn faster, and scale when it matters. He writes about modern engineering, product thinking, and helping teams ship things that matter.

Through his consultancy, More Than Monkeys, Gary helps startups and scaleups turn chaos into clarity — building web and mobile apps that ship early, scale sustainably, and deliver value without the guesswork.

(AI was used to improve syntax and grammar)